The gait analysis (GA) toolkit is a tool for assessing and analyzing human movement patterns. Data from wearable sensors such as accelerometers and gyroscopes carry detailed information about an individual's gait, and the toolkit processes and interprets these signals so that researchers and clinicians can gain insight into many aspects of human gait.

In medical research, researchers can combine wearable sensors with the GA toolkit to study the kinematics (joint angles, joint velocities) and kinetics (forces, moments) of gait. This information can be used to investigate how factors such as injury, disease, footwear, or walking conditions affect gait.

In clinical assessment, gait analysis supports the evaluation of patients with neurological or musculoskeletal disorders. By analyzing wearable sensor data with the toolkit, clinicians can evaluate gait abnormalities objectively, identify specific movement impairments, and track changes over time. This information can inform treatment planning and help monitor the effectiveness of interventions.

In fall risk assessment, gait analysis can be used to estimate an individual's risk of falling, especially in older adults. Patterns in wearable sensor data that indicate increased fall risk, such as increased gait variability or instability, can be identified and used to guide fall prevention strategies and interventions.

In rehabilitation, wearable sensors combined with the GA toolkit can track the progress of individuals undergoing gait retraining or rehabilitation programs. Objective measurements of gait parameters can quantify improvements, guide treatment decisions, and personalize rehabilitation protocols.
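
Gait variability, named above as a fall-risk indicator, is commonly quantified as the coefficient of variation (CV) of stride time: the sample standard deviation of stride durations divided by their mean. The sketch below is illustrative only and is not part of the GA toolkit; the function `stride_time_cv` is hypothetical, and it assumes heel-strike times for one foot have already been detected from the sensor signals by some upstream step.

```python
import numpy as np

def stride_time_cv(heel_strike_times_s):
    """Coefficient of variation (%) of stride time.

    heel_strike_times_s: successive heel-strike times of ONE foot, in seconds
    (hypothetical input from an upstream gait-event detector).
    """
    t = np.asarray(heel_strike_times_s, dtype=float)
    if t.size < 3:
        raise ValueError("need at least three heel strikes (two strides)")
    strides = np.diff(t)                                   # stride durations in seconds
    return 100.0 * strides.std(ddof=1) / strides.mean()    # sample SD relative to mean

# Hypothetical heel-strike times: steady vs. irregular stepping.
steady = [0.0, 1.1, 2.2, 3.3, 4.4]
irregular = [0.0, 1.0, 2.3, 3.2, 4.6]
print(f"steady: {stride_time_cv(steady):.1f}%  irregular: {stride_time_cv(irregular):.1f}%")
```

A higher CV means less regular stepping; the steady sequence gives a CV of essentially zero, while the irregular one gives a clearly positive value.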

Objective of GA in this Module

The gait analysis (GA) toolkit is a machine-learning-based module for detecting common activities of daily living (ADLs): walking, jogging, going upstairs, going downstairs, sitting, and standing. It contains a pre-trained model built on acceleration and gyroscope signals obtained from wearable inertial sensors.

Figure 1: Examples of GA applications: detecting freezing of gait (FOG) in Parkinson's patients (left) and preventing falls (right).

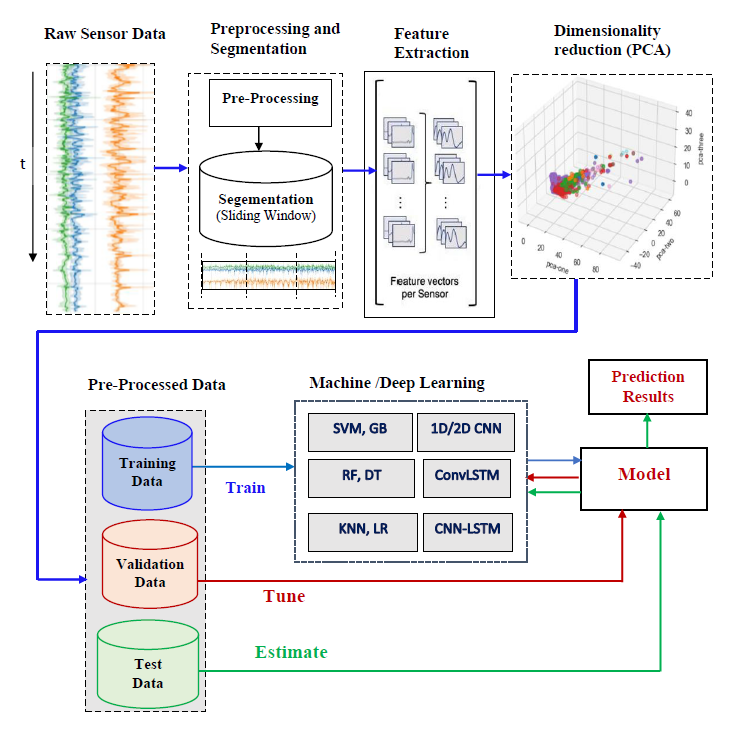

During the development of the GA toolkit, a range of machine learning methods (random forest, support vector machine, decision tree, logistic regression, etc.) and deep learning models (CNN, CNN-LSTM, DeepConvLSTM, etc.) were trained and compared. CNN-LSTM and random forest performed nearly equally and outperformed all other models. Random forest was selected as the benchmark model because it detects both dynamic and static activities with a low error rate at a relatively low computational cost.

Before the data were fed to these models, the raw sensor signals were preprocessed (data cleaning, normalization, filtering, balancing, etc.). The signals were then segmented into many short, partially overlapping windows. The GA module uses a window length of 6.4 seconds (128 samples, which implies a sampling rate of 20 Hz) and an overlap of 1.25 seconds. Relevant time-domain features were extracted from each window, and the resulting feature vectors were used to train the machine learning models. The final model was tuned by an exhaustive grid search with cross-validation over a defined hyperparameter space. Fig. 1 shows the general process of the GA toolkit development pipeline.
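
The segmentation, feature extraction, and tuning steps described above can be sketched with NumPy and scikit-learn. This is a minimal sketch under stated assumptions, not the toolkit's implementation: the 20 Hz sampling rate is inferred from 128 samples per 6.4 s window, the 1.25 s overlap becomes 25 samples, each window takes the majority label of its samples, and the feature set (per-axis mean, standard deviation, minimum, maximum, and median, plus the mean and standard deviation of the acceleration magnitude) and the hyperparameter grid are hypothetical. It uses three acceleration axes and synthetic data; gyroscope channels would be added as extra columns.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

FS_HZ = 20                           # inferred: 128 samples per 6.4 s window
WINDOW = 128                         # samples per window (6.4 s)
OVERLAP = int(round(1.25 * FS_HZ))   # 1.25 s overlap -> 25 samples
STEP = WINDOW - OVERLAP              # 103 samples between window starts

def segment(signal, labels, window=WINDOW, step=STEP):
    """Split an (n_samples, n_axes) array into partially overlapping windows.

    Each window is labeled with the most frequent label among its samples (assumption).
    """
    windows, window_labels = [], []
    for start in range(0, len(signal) - window + 1, step):
        windows.append(signal[start:start + window])
        values, counts = np.unique(labels[start:start + window], return_counts=True)
        window_labels.append(values[np.argmax(counts)])
    return np.stack(windows), np.array(window_labels)

def time_domain_features(windows):
    """Hypothetical feature set for input of shape (n_windows, window, n_axes)."""
    per_axis = [windows.mean(axis=1), windows.std(axis=1),
                windows.min(axis=1), windows.max(axis=1),
                np.median(windows, axis=1)]
    magnitude = np.linalg.norm(windows, axis=2)   # shape (n_windows, window)
    overall = [magnitude.mean(axis=1, keepdims=True),
               magnitude.std(axis=1, keepdims=True)]
    return np.hstack(per_axis + overall)          # 5 * n_axes + 2 columns

# Synthetic 3-axis acceleration: one static and one dynamic activity, 100 s each.
rng = np.random.default_rng(0)
n = 2000
t = np.arange(n) / FS_HZ
static = rng.normal(0.0, 0.05, (n, 3)) + np.array([0.0, 0.0, 9.81])
dynamic = (3.0 * np.column_stack([np.sin(2 * np.pi * 2.0 * t)] * 3)
           + rng.normal(0.0, 0.5, (n, 3)))
signal = np.vstack([static, dynamic])
labels = np.array(["sitting"] * n + ["walking"] * n)

windows, y = segment(signal, labels)
X = time_domain_features(windows)

# Exhaustive grid search with cross-validation over a hypothetical grid.
param_grid = {"n_estimators": [50, 100], "max_depth": [None, 10]}
search = GridSearchCV(RandomForestClassifier(random_state=0), param_grid, cv=3)
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

Random forests need no feature scaling, which keeps the pipeline short. With real multi-subject data, splitting folds by subject ID (for example with scikit-learn's `GroupKFold`) would keep overlapping windows from the same person out of both training and validation folds at once.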

The trained GA module takes raw acceleration sensor readings in CSV or text format. The input contains the three-axis acceleration values together with timestamps and the subject ID. The prediction output, in JSON format, contains a gait class score for each of the six ADLs (walking, jogging, going upstairs, going downstairs, sitting, and standing), giving the estimated probability of each activity. The module can also return the gait class with the highest score.
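
The input and output formats described above might be handled roughly as follows. This is a hedged sketch, not the module's actual interface: the column names (`subject_id`, `timestamp`, `acc_x`, `acc_y`, `acc_z`), the helper functions `load_readings` and `predictions_to_json`, the JSON field names, the class label strings, and the use of non-overlapping windows with per-axis means as placeholder features are all assumptions. A scikit-learn `DummyClassifier` stands in for the trained random forest; any fitted classifier exposing `predict_proba` and `classes_` would slot in the same way.

```python
import io
import json

import numpy as np
import pandas as pd
from sklearn.dummy import DummyClassifier

ADL_CLASSES = ["walking", "jogging", "upstairs", "downstairs", "sitting", "standing"]
WINDOW = 128  # samples per window, as in the GA module

def load_readings(source):
    """Read raw readings from a CSV path or file-like object.

    Assumed (hypothetical) columns: subject_id, timestamp, acc_x, acc_y, acc_z.
    """
    df = pd.read_csv(source)
    return df.sort_values(["subject_id", "timestamp"]).reset_index(drop=True)

def predictions_to_json(model, features, subject_id):
    """Return one JSON record per window: a score per class plus the top class."""
    proba = model.predict_proba(features)  # shape (n_windows, n_classes)
    records = []
    for row in proba:
        scores = {str(c): float(p) for c, p in zip(model.classes_, row)}
        records.append({
            "subject_id": subject_id,
            "scores": scores,
            "predicted_class": max(scores, key=scores.get),
        })
    return json.dumps(records, indent=2)

# Synthetic 20 Hz recording for one subject, round-tripped through CSV text.
rng = np.random.default_rng(1)
n = 2 * WINDOW
demo = pd.DataFrame({
    "subject_id": 7,
    "timestamp": np.arange(n) / 20.0,
    "acc_x": rng.normal(0.0, 1.0, n),
    "acc_y": rng.normal(0.0, 1.0, n),
    "acc_z": rng.normal(9.81, 1.0, n),
})
readings = load_readings(io.StringIO(demo.to_csv(index=False)))

# Non-overlapping windows and per-axis means keep the demo short (placeholder features).
acc = readings[["acc_x", "acc_y", "acc_z"]].to_numpy()
windows = acc[: len(acc) // WINDOW * WINDOW].reshape(-1, WINDOW, 3)
features = windows.mean(axis=1)

# Stand-in model: DummyClassifier predicts the class priors of its training labels.
model = DummyClassifier(strategy="prior").fit(
    np.zeros((8, 3)), ADL_CLASSES + ["walking", "walking"]
)
output = predictions_to_json(model, features, int(readings["subject_id"].iloc[0]))
print(output)
```

Keeping the per-class scores and the top class in the same record lets a caller use either the full probability vector or only the most likely activity.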