The FER (facial expression recognition) AI toolkit is a user-friendly web application built with the React and Django frameworks. Based on recommendations from the pilots of the Alameda consortium, the toolkit focuses on three distinct categories: Parkinson's disease (PD), multiple sclerosis (MS), and Stroke, with specific emotion classes assigned to each category. A separate convolutional neural network (CNN) model has been trained for each category, with the aim of achieving higher accuracy than a single shared model. The system is integrated with IAM for authentication and with SemKG for data storage and retrieval. The application's key functions are category selection from a dropdown menu and image upload for processing.

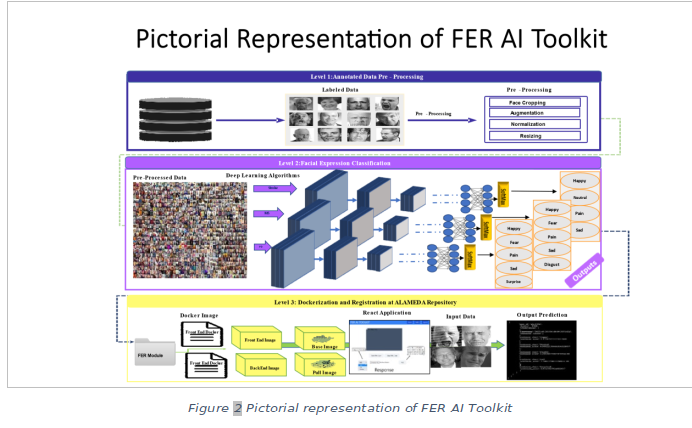

Human facial expressions play a significant role in the analysis of human behavior. Up to 55% of the total importance in efficient oral communication is attributable to body language, which includes facial expressions; voice tone and words account for 38% and 7%, respectively. Because facial expressions can reveal important information about a patient's physical and mental health, they can be extremely useful in diagnosis, especially for neurological disorders such as Parkinson's disease, stroke, MS, and Alzheimer's disease. For many neurological conditions, accurate clinical diagnosis and long-term disease monitoring can be difficult due to the need for expensive medical testing. The FER AI toolkit is an application designed to assist with these tasks. A pictorial representation of the FER AI toolkit is given below.

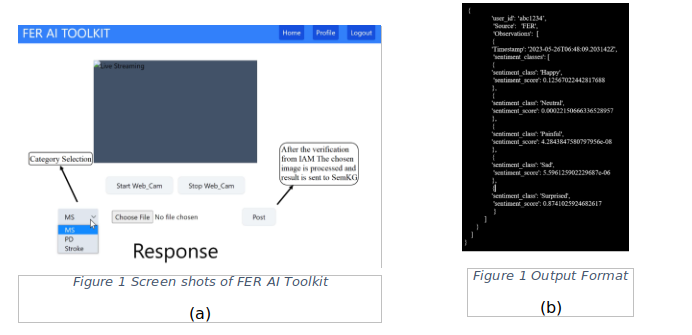

The FER AI toolkit accepts an image containing faces as input and lets the user select a specific category. It then uses the CNN model trained for the selected category to predict a probability score for each emotion class, and generates a response in a format compatible with the requirements of the Alameda project, so that the emotion analysis results can be represented and interpreted consistently.
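The prediction-to-response step described above can be sketched roughly as follows. This is a minimal illustration, not the toolkit's actual code: it assumes the model returns one raw score (logit) per emotion class, and the function names `softmax` and `build_response`, the response keys, and the example logits are all hypothetical.

```python
import json
import math


def softmax(logits):
    """Convert raw model outputs into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def build_response(category, labels, logits):
    """Pair each emotion label with its probability (hypothetical schema)."""
    if len(labels) != len(logits):
        raise ValueError("expected one logit per emotion class")
    probs = softmax(logits)
    scores = {label: round(p, 4) for label, p in zip(labels, probs)}
    return {
        "category": category,
        "scores": scores,
        # The class with the highest probability is reported as the prediction.
        "predicted": max(scores, key=scores.get),
    }


# Example with made-up logits for the five PD emotion classes.
pd_labels = ["Happy", "Neutral", "Painful", "Sad", "Surprised"]
response = build_response("PD", pd_labels, [2.0, 0.5, 0.1, -1.0, 0.3])
print(json.dumps(response, indent=2))
```

If the trained model already ends in a softmax layer, the `softmax` call would be unnecessary and the scores could be used directly.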

Sample output is shown in the image.

Figure 1 (a) gives an overview of the application, in which the user selects a category and chooses an image from the local computer. The FER AI toolkit returns its output in JSON format, which is sent to SemKG as shown in Figure 1 (b).

Access Guide:

Open a browser and go to https://fer.alamedaproject.eu

Login

Enter your credentials and click Submit

Data preparation

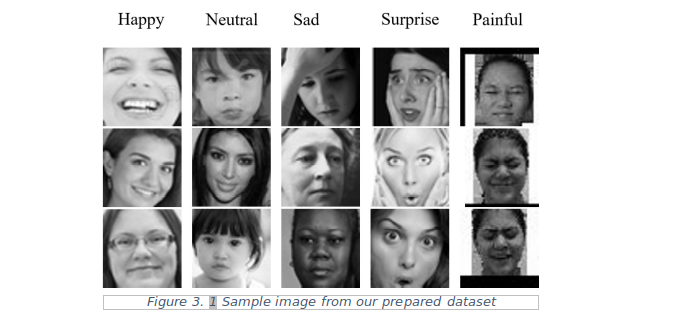

Following the recommendations of the Alameda consortium, we were tasked with creating three distinct categories (PD, MS, and Stroke) and generating a facial expression dataset for each. For PD, we created five classes of expressions: Happy, Neutral, Painful, Sad, and Surprised. For MS, we created five classes: Disgust, Fear, Happy, Painful, and Sad. For Stroke, we created four classes: Happy, Neutral, Painful, and Sad. To accomplish this, we explored available datasets such as fer2013 and the Delaware Pain Database.
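The category-to-class assignment listed above could be captured in a small lookup table, as in the sketch below. The class lists come from the text; the names `EMOTION_CLASSES` and `classes_for` are hypothetical and only illustrate how a backend might validate the category chosen in the dropdown.

```python
# Emotion classes per category, as listed in the text above.
EMOTION_CLASSES = {
    "PD": ["Happy", "Neutral", "Painful", "Sad", "Surprised"],
    "MS": ["Disgust", "Fear", "Happy", "Painful", "Sad"],
    "Stroke": ["Happy", "Neutral", "Painful", "Sad"],
}


def classes_for(category):
    """Return the emotion classes for a category, rejecting unknown ones."""
    try:
        return EMOTION_CLASSES[category]
    except KeyError:
        valid = ", ".join(sorted(EMOTION_CLASSES))
        raise ValueError(
            f"unknown category {category!r}; expected one of: {valid}"
        ) from None


for name in EMOTION_CLASSES:
    print(name, len(classes_for(name)), classes_for(name))
```

Keeping the mapping in one place means the number of model outputs and the labels in the response stay in sync for each category.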

Dataset

We achieved this objective by merging fer2013 with the Delaware Pain Database, which adds the pain class to the PD, MS, and Stroke categories. To ensure consistency, we preprocessed the Delaware Pain Database by converting the images to grayscale and resizing them to 48x48, matching the dimensions of fer2013. We also applied data augmentation to the pain class, increasing it to a total of three thousand pain-related images. To address class imbalance, we maintained an equal number of images in the rest of the classes (Happy, Neutral, Disgust, Sad, and Surprised).
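The preprocessing described above (grayscale conversion, resizing to 48x48, augmentation, and class balancing) might look roughly like the following dependency-free sketch. Several details are assumptions rather than the project's actual choices: the standard luminance weights, nearest-neighbour resizing, horizontal flipping as the augmentation, and random downsampling for balancing. All function names are hypothetical, and a real pipeline would more likely use an image library.

```python
import random

TARGET_SIZE = 48  # fer2013 images are 48x48 grayscale


def to_grayscale(rgb_image):
    """Convert rows of (r, g, b) tuples to rows of 0-255 luminance values."""
    return [
        [int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
        for row in rgb_image
    ]


def resize_nearest(gray, size=TARGET_SIZE):
    """Resize a grayscale image to size x size by nearest-neighbour sampling."""
    height, width = len(gray), len(gray[0])
    return [
        [gray[y * height // size][x * width // size] for x in range(size)]
        for y in range(size)
    ]


def horizontal_flip(gray):
    """Mirror an image left to right (one simple augmentation, assumed here)."""
    return [list(reversed(row)) for row in gray]


def balance(images_by_class, per_class, seed=0):
    """Randomly keep at most per_class images in every class."""
    rng = random.Random(seed)
    return {
        label: rng.sample(images, min(per_class, len(images)))
        for label, images in images_by_class.items()
    }


# Example: a synthetic 64x96 pure-red image stands in for a pain photo.
rgb = [[(255, 0, 0)] * 96 for _ in range(64)]
face = resize_nearest(to_grayscale(rgb))
augmented = [face, horizontal_flip(face)]  # each flip doubles the samples
print(len(face), len(face[0]), len(augmented))
```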

Next steps

In the upcoming version, the primary objective is to improve accuracy, particularly for the pain and disgust classes. One approach under consideration is to merge additional publicly available databases with the current datasets. This would significantly expand the pool of training samples and could help the model recognize the pain and disgust classes more accurately.

Another strategy is to use Generative Adversarial Networks (GANs) to generate synthetic images representing the pain and disgust classes. These generated images can then be added to the training set, giving the model more diverse samples for these challenging classes.

Furthermore, we can explore advanced deep learning architectures such as transformers. In summary, the main challenge for FER Version 2 is improving accuracy for the pain and disgust classes. We plan to address it by merging publicly available databases with the existing datasets, generating additional images for these classes with GANs, and evaluating transformer-based models.