Applications of Facial Expression Recognition in the FER AI Toolkit

Facial Expression Recognition (FER) is a core component of the FER AI Toolkit and supports its use across diverse domains. This document describes the development of a custom Convolutional Neural Network (CNN) model designed specifically for FER within the toolkit.

Challenges and Innovations in the FER Component

Implementing FER within the FER AI Toolkit presented two main challenges. The first was compliance with strict data privacy regulations, which prohibited the use of end users' facial data for training our FER algorithm. The second was that training an FER model on generic data often led to suboptimal results: FER depends heavily on the characteristics of the data domain, so a model trained in one domain tends to perform poorly in another. To address both, we combined generic and target-based features to build a model that recognizes emotions across a range of user facial expressions, from common emotional states to more nuanced responses.

Methodology Challenge

We began developing the specialized CNN model by experimenting with diverse domain-specific datasets, which revealed how difficult it was to adapt the model to different domains. In response, we pursued a dual approach: training on a representative dataset rich in emotional expressions, and validating on small-scale datasets from varying domains. We initially used the FER 2013 dataset and aimed to include the Dilawar Pain Database to improve sensitivity to pain-related expressions, although the limited amount of publicly available data posed a challenge. The pain class still requires additional data collection and refinement. This approach laid the foundation for our customized CNN model for FER, addressing domain specificity while recognizing the need for data enrichment in specific domains.
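The validation half of this dual approach can be illustrated with a minimal sketch that reports accuracy separately for each validation domain, so that a weak domain is not hidden by a strong overall average. The function name `accuracy_by_domain`, the domain names, and the sample predictions are all hypothetical and are not taken from the toolkit.

```python
from collections import defaultdict


def accuracy_by_domain(samples):
    """Return {domain: accuracy} for (domain, true_label, predicted_label) triples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for domain, true_label, predicted_label in samples:
        total[domain] += 1
        if true_label == predicted_label:
            correct[domain] += 1
    return {domain: correct[domain] / total[domain] for domain in total}


# Hypothetical validation results; domains and labels are made up for illustration.
validation_samples = [
    ("webcam", "happy", "happy"),
    ("webcam", "sad", "neutral"),
    ("clinical", "pain", "pain"),
    ("clinical", "pain", "sad"),
    ("clinical", "neutral", "neutral"),
    ("clinical", "happy", "happy"),
]

per_domain = accuracy_by_domain(validation_samples)
for domain, accuracy in per_domain.items():
    print(f"{domain}: {accuracy:.2f}")
```

A large gap between domains in such a report would be one signal that the training data under-represents the weaker domain.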

Model Development Challenge

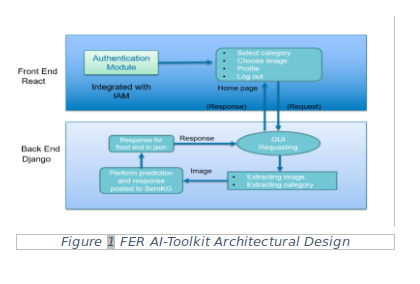

The key challenge was that pre-trained models struggled to generalize effectively in the context of FER. This limitation prompted us to design our own lightweight CNN model, optimized for real-time inference and tailored for straightforward application deployment. The architecture shown in Figure 1 allows the FER AI Toolkit to meet privacy requirements and run in real-time, real-world application scenarios, overcoming the constraints associated with pre-trained models.
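To give a rough sense of what "lightweight" can mean here, the sketch below counts the trainable parameters of a small, purely hypothetical CNN. It is not the architecture in Figure 1: the three convolution blocks, their channel widths, and the eight output classes (the seven FER2013 emotions plus an assumed pain class) are illustrative assumptions. The 48x48 grayscale input matches the FER2013 image format.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus biases of a 2D convolution with a square kernel."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels


def dense_params(in_features, out_features):
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


def count_parameters(input_size, in_channels, conv_channels, num_classes):
    """Count parameters of a stack of conv blocks followed by one dense layer.

    Each block is assumed to be a 3x3 'same' convolution followed by
    2x2 max pooling, which halves the spatial size.
    """
    total = 0
    size = input_size
    channels = in_channels
    for out_channels in conv_channels:
        total += conv_params(channels, out_channels, 3)
        channels = out_channels
        size //= 2
    total += dense_params(channels * size * size, num_classes)
    return total


# Hypothetical configuration: 48x48 grayscale input, three conv blocks, 8 classes.
total_parameters = count_parameters(48, 1, [16, 32, 64], 8)
print(total_parameters)  # 41736
```

A model of this size has tens of thousands of parameters, compared with the millions typical of general-purpose pre-trained image backbones, which is the kind of difference that makes real-time inference on modest hardware practical.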

Pipeline Structure for Facial Expression Recognition

The FER component's pipeline consists of a neural network framework built around a custom CNN model trained for emotion recognition on facial images. When a user submits a facial image to the FER AI Toolkit, the image passes through a sequence of preprocessing steps: image enhancement, feature extraction, and data normalization. These steps put the input image into the format the deep learning model expects. The model's predictions are then mapped to emotion categories based solely on the image content.
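Two of these steps, data normalization and the mapping from model output to an emotion category, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the toolkit's implementation: the function names are hypothetical, the label order follows the usual FER2013 index order with a pain class appended as an assumption, and the logits are made-up values standing in for real model output.

```python
import math

# FER2013 label order, with "pain" appended as an assumed eighth class.
EMOTIONS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral", "pain"]


def normalize(image):
    """Scale 8-bit pixel intensities (0-255) to the range [0, 1]."""
    return [[pixel / 255.0 for pixel in row] for row in image]


def softmax(logits):
    """Convert raw scores to probabilities; subtract the max for numerical stability."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def decode(logits, labels=EMOTIONS):
    """Return the most probable label and its probability."""
    probabilities = softmax(logits)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return labels[best], probabilities[best]


# A tiny 2x2 stand-in for a grayscale face crop.
image = [[0, 128], [255, 64]]
normalized = normalize(image)

# Made-up logits standing in for the CNN's output on one image.
example_logits = [0.1, -1.0, 0.3, 2.5, 0.0, 0.4, 1.2, -0.5]
label, confidence = decode(example_logits)
print(label, round(confidence, 3))
```

Returning the probability alongside the label lets a caller apply a confidence threshold before acting on a prediction.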

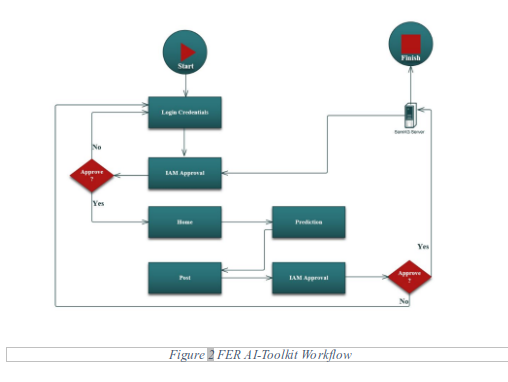

Figure 2: System workflow

Future Directions for our FER System

Our existing FER model recognizes emotions from individual facial expressions, and we plan to extend it further. Upcoming work focuses on integrating contextual cues, speaker personality, and emotional intent into the FER component. We are also exploring the fusion of text and image features to improve overall performance. Together, these enhancements are intended to give the FER system a richer and more complete picture of a user's emotional state.

Conclusions

FER plays an integral role in expanding the capabilities of the FER AI Toolkit, with a custom CNN model at its core. Through careful data processing and model development, we have achieved precise emotion recognition on facial images. The model's scalability across multiple languages further enhances its performance and robustness. Our future efforts will focus on conversational aspects and emotional encoding to extend what the toolkit can do.